https://www.brentozar.com/blog/

https://www.erikdarlingdata.com/blog/

SQL Server Assessment Using SSMS and SSRS

https://blog.sqlauthority.com/

https://jmmv.dev/2021/04/always-be-quitting.html

Best Practices:

7. Instant File Initialization (IFI) – SQL Server Service account to be granted Perform Volume Maintenance Tasks permissions in windows

8. Blocking – Allow Snapshot Isolation & Read committed Snapshot Isolation. Make sure to allow higher storage for TempDB before you turn RCSI ON.

9. Virtual Log Files:

10. Make Friends with Network & Storage Admins – Storage could be the problem and network

11. Over Provisioned VMs & Host

12. COMPRESSION

13. STATISTICS

14. INDEXES

15. EXECUTION PLANS

Traditionally questions about how much memory SQL Server needs were aimed at how to appropriately set the ‘max server memory’ sp_configure option in SQL Server, and in my book the recommendation that I make is to reserve 1 GB of RAM for the OS, 1 GB for each 4 GB of RAM installed from 4–16 GB, and then 1 GB for every 8 GB RAM installed above 16 GB RAM. Then monitor the Memory\Available MBytes performance counter in Windows to determine if you can increase the memory available to SQL Server above the starting value. (Note: This counter should remain above the 150-300MB at a bare minimum, Windows signals the LowMemoryResourceNotification at 96MB so you want a buffer, but I typically like it to be above 1GB on larger servers with 256GB or higher RAM) This has typically worked out well for servers that are dedicated to SQL Server. You can also get much more technical with determining where to set ‘max server memory’ by working out the specific memory requirements for the OS, other applications, the SQL Server thread stack, and other multipage allocators. Typically this would be ((Total system memory) – (memory for thread stack) – (OS memory requirements ~ 2-4GB) – (memory for other applications) – (memory for multipage allocations; SQLCLR, linked servers, etc)), where the memory for thread stack = ((max worker threads) *(stack size)) and the stack size is 512KB for x86 systems, 2MB for x64 systems and 4MB for IA64 systems. The value for ‘max worker threads’ can be found in the max_worker_count column of sys.dm_os_sys_info. However, the assumption with either of these methods is that you want SQL Server to use everything that is available on the machine, unless you’ve made reservations in the calculations for other applications.

As more shops move towards virtualizing SQL Servers in their environment this question is more and more geared towards determining what is the minimum amount of memory that a SQL Server will need to run as a VM. Unfortunately there is no way to calculate out what the ideal amount of RAM for a given instance of SQL Server might actually be since SQL Server is designed to cache data in the buffer pool, and it will typically use as much memory as you can give it. One of the things to keep in mind when you are looking at reducing the RAM allocated to a SQL Server instance is that you will eventually get to a point where the lower memory gets traded off for higher disk I/O access in the environments.

If you need to figure out the ideal configuration for SQL Server memory in an environment that has been over provisioned the best way to try to go about doing this is start off with a baseline of the environment and the current performance metrics. Counters to begin monitoring would include:

Typically if the environment has excess memory for the buffer pool, the Page Life Expectancy value will continue to increase by a value of one every second and it won’t typically drop off under the workload because all of the data pages end up being cached. At the same time, the number of SQL Server:Buffer Manager\Page reads/sec will be low after the cache ramp up occurs which will also correspond to a low value for Physical Disk\Disk Reads/sec.

Once you have your baseline for the environment, make a change to the sp_configure ‘max server memory’ option to reduce the size of the buffer pool by 1GB and then monitor the impact to the performance counters after things stabilize from the initial cache flushing that may typically occur when RECONFIGURE is run in the environment. If the level of Page Life Expectancy remains acceptable for your environment (keeping in mind that a fixed target of >= 300 is ridiculous for servers with large amounts of RAM installed), and the number of SQL Server:Buffer Manager\Page reads/sec is within what the disk I/O subsystem can support without performance degradation, repeat the process of reducing the sp_configure value for ‘max server memory’ by 1GB and continuing to monitor the impact to the environment.

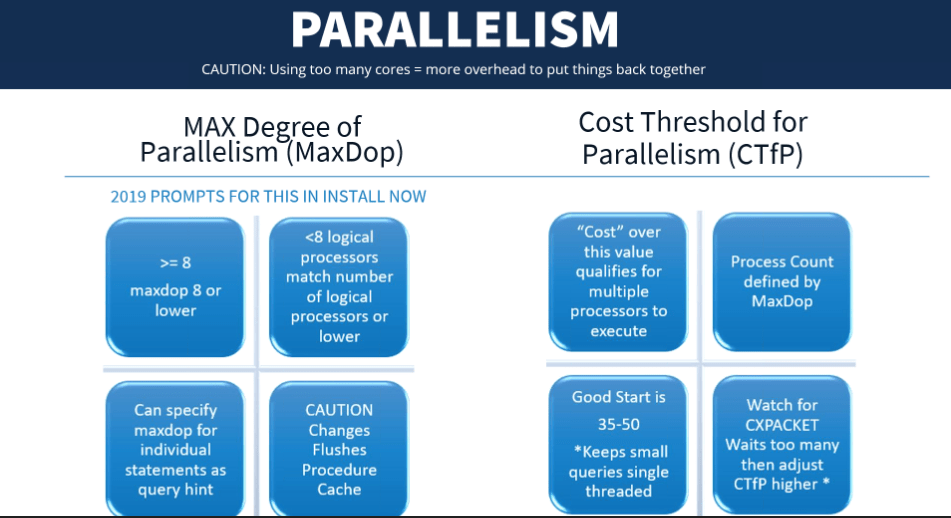

SQL Server waits provide information about what the database engine is waiting on while extracting queries on a monitored server. While there are many different wait types, CXPACKET waits are a commonly misinterpreted data measurement and require additional clarification.

CXPACKET waits often result when parallelized query threads have an unequal workload, causing a block on the entire query until the longest-running thread is complete. Note that the trigger for the CXPACKET wait occurs not with parallelism, but with the distribution of work on the individual threads.

While you can decrease the number of CXPACKET waits by reducing the MAXDOP on the monitored server, this is often not a recommended course of action as it may cause the wait to disappear and the underlying query to take longer to execute. For example, this issue may result from large parallel table scans, which you can verify using other wait types on the server, such as PAGIOLATCH_XX. This may also result from out-of-date statistics that cause a bad query plan and the unequal workload among threads.

Before adjusting MAXDOP, review your indexes and statistics for accuracy and make sure there are no other underlying issues. If you choose to adjust MAXDOP, recommended settings include 4, 2, or 1. For a NUMA system, use the number of cores available in a single NUMA node. You may want to make these updates on the single query instead of the entire instance to avoid any additional problems.

This happens when SQL server is done processing all the data it needs to output and waiting for the client/application to consume the data. The waits typically could come from network latency, which causes the data to be processed by the client slowly across the network. It could also happen if the client/application on the other end is consuming the data on row-by-row basis (RBAR operation).

Measures the pressure on the physical hard disk array.

Equivalent PerfMon counter: Logical Disk: Avg. Disk Queue Length

Explanation: This is the average number of physical read and write requests queued for a physical disk.

Guideline values: Due to changes in technology, such as virtualization, disk and controller technology, SANs and more, this counter is no longer a good indicator of I/O bottlenecks. A better measure of I/O bottlenecks is Avg. disk read time and Avg. disk write time.

Check also: Disk avg. read time and Disk avg. write time .