sqlserverfast.com

Site run by Hugo Kornelis

sqlserverfast.com

Site run by Hugo Kornelis

How often do you update your statistics?

Here is my general advise: https://vinayrahulare.wordpress.com/2020/12/08/database-maintenance-best-practices/

Everyone’s criteria is different for how often you want to update the statistics.

If you like to find outdated statistics. Here is an useful script which shows you the last time your statistics were updated and the number of modifications made on a column. Based on these two parameters you can decide if you would like to update the statistics for a particular table.

SELECT DISTINCT

OBJECT_NAME(s.[object_id]) AS TableName,

c.name AS ColumnName,

s.name AS StatName,

STATS_DATE(s.[object_id], s.stats_id) AS LastUpdated,

DATEDIFF(d,STATS_DATE(s.[object_id], s.stats_id),getdate()) DaysOld,

dsp.modification_counter,

s.auto_created,

s.user_created,

s.no_recompute,

s.[object_id],

s.stats_id,

sc.stats_column_id,

sc.column_id

FROM sys.stats s

JOIN sys.stats_columns sc

ON sc.[object_id] = s.[object_id] AND sc.stats_id = s.stats_id

JOIN sys.columns c ON c.[object_id] = sc.[object_id] AND c.column_id = sc.column_id

JOIN sys.partitions par ON par.[object_id] = s.[object_id]

JOIN sys.objects obj ON par.[object_id] = obj.[object_id]

CROSS APPLY sys.dm_db_stats_properties(sc.[object_id], s.stats_id) AS dsp

WHERE OBJECTPROPERTY(s.OBJECT_ID,'IsUserTable') = 1

AND (s.auto_created = 1 OR s.user_created = 1)

ORDER BY DaysOld;

When you create a temp table, you might think that it is exclusive to your session. However, it’s not. Other sessions can reuse your table structure and statistics.

Temp tables in Stored procs: Here is what happens when you repeatedly call a stored procedure which uses temp tables. The table statistics from one table of similar structure gets re-used for the next session. How do you solve this problem?

First, you could execute the stored procedure with the WITH RECOMPILE option to produce better plans.

Second, you could add OPTION(RECOMPILE) at the end of the select query. Second option is a better one than the first since the WITH RECOMPILE causes the entire stored procedure to recompile. Whereas OPTION(RECOMPILE) only recompiles the select statement.

Third, to handle the parameter sniffing problem is to add UPDATE STATISTICS command to the stored procedure instead of OPTION(RECOMPILE). The statistics we want to update are created during compilation or recompilation of the SELECT statement, suggesting the UPDATE STATISTICS should come after the SELECT. Still we dont get accurate statistics.

Finally, we add both UPDATE STATISTICS as well as OPTION(RECOMPILE). This finally takes care of the parameter sniffing issues we were seeing earlier. It’s a success!

This goes to show that Cardinality estimation is a tough problem – try using the statistics histograms yourself to predict how the values in the temp table will join to the histogram on the same column for the much larger table. You may find the task of matching values to values using RANGE_ROWS, EQ_ROWS and so on is really not an easy one at all.

Temporary table Caching Internals:

Key point to note is that CREATE TABLE and DROP TABLE do not create and drop temp tables in a stored procedure, if the temporary object can be cached. Instead, the temporary object is renamed to an internal form when DROP TABLE is executed, and renamed back to the same user-visible name when the corresponding CREATE TABLE is encountered on the next execution.

In addition, any statistics that were auto-created on the temporary table are also cached. This means that statistics from a previous execution remain when the procedure is called again.

CREATE OR ALTER PROCEDURE dbo.TempTableDemo

AS

BEGIN

CREATE TABLE #Temp (col1 integer NULL);

SELECT ObjectID =

OBJECT_ID(N'tempdb..#Temp');

DROP TABLE #Temp;

END;

GO

EXECUTE dbo.TempTableDemo;

EXECUTE dbo.TempTableDemo;

EXECUTE dbo.TempTableDemo;

GO

Example output:

This is quite different from how a permanent table behaves. With a permanent table, CREATE and DROP definitely create and drop a table on each execution of the procedure. The table is new each time, new statistics and plans are created on each execution.

It allows temporary tables an opportunity to cache plans and temporary objects for reuse, which is not possible with permanent table.

Recompilation Thresholds

Cached query plans include a Recompilation Threshold (RT) for statistics considered interesting by the optimizer while compiling the plan.

The query plan also records the cardinality of tables and indexed view referenced by the plan, at the time the plan is compiled.

Generally, if the column modification counter (colmodctr) for interesting statistics change by RT or more, the query recompiles.

Similarly, if the table cardinality has changed by RT or more compared with the value stored at the time the cached plan was created, the query plan also recompiles.

The value of RT depends on the type of table (permanent or temporary) and the number of rows (n) in the table or indexed view at the time of compilation.

The following summary of RT values has been taken from a Microsoft technical article.

Generally, although queries referencing permanent tables recompile when interesting statistics modification counters change by RT or more, this is not the case for cached temporary tables in stored procedures, which only recompile when table cardinality changes by RT or more, unless OPTION(RECOMPILE) is used, when statistics counters come back into play.

This could be a bug in SQL server, but on the other hand, it is reasonable that a cached temporary table should not cause a recompilation simply because the procedure is called a number of times with the same parameter, with the number of modifications accumulating on each call to eventually pass RT.

Summary

Temporary tables in stored procs have couple of unexpected behaviors:

These above behaviors are not intentional and they have been this way for a long time and not very likely to be changed in the foreseeable future.

Are you likely to be affected by these issues?

A temporary table containing wide range of numbers of rows returns per execution is not likely to be affected. If the recompilation threshold is passed, chances are you will get good statistics and any cached plan with recompile without intervention.

For a cached plan, when the temp table contains 20,000 rows the default threshold is 500 + 0.2 * 20,000 = 4500 rows. This means the next execution with more than 24,500 rows or less than 15,500 rows will pass the threshold test.

Avoiding temporary table caching removes the risk of stale statistics, but a cached plan may still be used unless the RECOMPILE query hint is used. Avoiding temporary table caching will also tend to increase contention on tempdb, and can be difficult to enforce in practice.

Procedures that routinely store relatively small result sets and use cached temporary tables are probably most at risk. This is a common scenario where temporary tables are often used for smaller sets.

General preference should be still to opt for simpler relations queries and, where necessary, for temporary tables over table variables as a starting point.

There are 3 types of statistics in a SQL Server database

General Facts about statistics

General Advice: Recommend to leave the Auto Stats option for the database to ON, and if there is a particular case where you are seeing bad query plans on certain tables, just disable Auto Stats for that table and enable the manual statistics job for that table.

How does the Auto Update stats Algorithm work you may ask?

So the Auto Update stats will fire for every 500 + 20% change in table rows. Of course, we have an improved algorithm in version SQL 2012 and above which is SQRT(1000 * Table rows). This is much better than the older algorithm.

When it fires, it will use the default sampling rate and here is the algorithm how it calculates the sampling rate.

Example: If we have 1 million rows, it would use a sampling rate of 30% but when the number of rows increase to 8 million, it would reduce the sampling rate to 10%. These sampling rates are not under the DBA’s control but optimizer decides it.

If you ever want to find out which table has been modified the most since the last statistics update. You can do so using the DMV sys.dm_db_stats_properties, simply look at the column modification_counter. This column contains the total number of modifications for the leading statistics column since the last time statistics were updated.

SELECT obj.name, obj.object_id, stat.name, stat.stats_id,

last_updated, modification_counter

FROM sys.objects AS obj

INNER JOIN sys.stats AS stat ON stat.object_id = obj.object_id

CROSS APPLY sys.dm_db_stats_properties(stat.object_id, stat.stats_id) AS sp

ORDER BY modification_counter DESC

The column last_updated suggests when was the statistics last updated and modification counter column indicates how many times the column was updated since the last statistics updated.

Please note that if any of your processes drop and recreate tables in the nightly ETLs, you would see the modification_counter as zero since the statistics are dropped when you drop a table and create it.

sys.dm_db_stats_properties is a newer replacement to the uber popular DBCC SHOW_STATISTICS command.

If you have auto-update statistics enabled for the database configuration, in most case, you do not need to worry about these details.

I figured i will store this on my blog since each time i scramble to google for the script to move the files. So here we are:

SELECT 'ALTER DATABASE tempdb MODIFY FILE (NAME = [' + f.name + '],'

+ ' FILENAME = ''T:\TempDB\' + f.name

+ CASE WHEN f.type = 1 THEN '.ldf' ELSE '.mdf' END

+ ''');'

FROM sys.master_files f

WHERE f.database_id = DB_ID(N'tempdb');

Copy the output of the above script and paste it in SSMS. I kind of like using the script to dynamically generate the script I need to run. Sometimes, depending of available space, I may have to move log file to another drive.

Please be sure to verify the file path used above. Otherwise, you would fail to start up the SQL server instance after you bounce the services.

If you need up in that situation. Here is what you do:

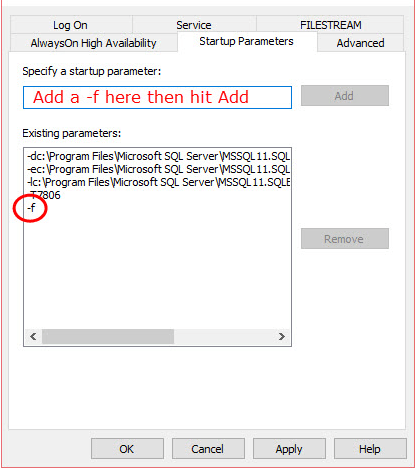

Re-start the instance in safe mode (-f startup parameter) and move tempdb. Then re-start without the parameter.

Here is an example of what that would look like: